Emotion Analysis


Connect to EvaDB

```python
%pip install --quiet "evadb[vision,notebook]"
# facenet_pytorch is used by the face detector UDF
%pip install --quiet facenet_pytorch

import evadb

# Open a connection to a local EvaDB instance and get a cursor for running queries
cursor = evadb.connect().cursor()
```

Note: you may need to restart the kernel to use updated packages.

Download the video and UDF implementations

```python
# A video of a happy person
!wget -nc "https://www.dropbox.com/s/gzfhwmib7u804zy/defhappy.mp4?raw=1" -O defhappy.mp4

# Emotion detection UDF implementation
!wget -nc https://raw.githubusercontent.com/georgia-tech-db/eva/master/evadb/udfs/emotion_detector.py

# Face detection UDF implementation
!wget -nc https://raw.githubusercontent.com/georgia-tech-db/eva/master/evadb/udfs/face_detector.py
```

```text
--2023-06-17 00:21:00-- https://www.dropbox.com/s/gzfhwmib7u804zy/defhappy.mp4?raw=1
Resolving www.dropbox.com (www.dropbox.com)... 162.125.9.18, 2620:100:601f:18::a27d:912
Connecting to www.dropbox.com (www.dropbox.com)|162.125.9.18|:443... connected.
HTTP request sent, awaiting response... 302 Found
Location: /s/raw/gzfhwmib7u804zy/defhappy.mp4 [following]
--2023-06-17 00:21:00-- https://www.dropbox.com/s/raw/gzfhwmib7u804zy/defhappy.mp4
Reusing existing connection to www.dropbox.com:443.
HTTP request sent, awaiting response... 302 Found
Location: https://ucdb88e4f7cbe6b7fcce2afc862e.dl.dropboxusercontent.com/cd/0/inline/B-IdFWkGulxDd5XDfmkDmrgSyuBLmJALJZOejL8n7U0pq8hL006_iYe-Xoz6IzwXZCFF0cGSeeldLr0zjsMAKVWNeXc-v3c9V_t-GYMzeb7RNu6gGi3QH9eEFCii811R_z3JehT0M1_YMiqVMHIekrYju7uZFcccji1SUoekefqnRA/file# [following]
--2023-06-17 00:21:00-- https://ucdb88e4f7cbe6b7fcce2afc862e.dl.dropboxusercontent.com/cd/0/inline/B-IdFWkGulxDd5XDfmkDmrgSyuBLmJALJZOejL8n7U0pq8hL006_iYe-Xoz6IzwXZCFF0cGSeeldLr0zjsMAKVWNeXc-v3c9V_t-GYMzeb7RNu6gGi3QH9eEFCii811R_z3JehT0M1_YMiqVMHIekrYju7uZFcccji1SUoekefqnRA/file
Resolving ucdb88e4f7cbe6b7fcce2afc862e.dl.dropboxusercontent.com (ucdb88e4f7cbe6b7fcce2afc862e.dl.dropboxusercontent.com)... 162.125.9.15, 2620:100:601f:15::a27d:90f
Connecting to ucdb88e4f7cbe6b7fcce2afc862e.dl.dropboxusercontent.com (ucdb88e4f7cbe6b7fcce2afc862e.dl.dropboxusercontent.com)|162.125.9.15|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 2699034 (2.6M) [video/mp4]
Saving to: 'defhappy.mp4'
defhappy.mp4 100%[===================>] 2.57M --.-KB/s in 0.02s
2023-06-17 00:21:01 (145 MB/s) - 'defhappy.mp4' saved [2699034/2699034]

File 'emotion_detector.py' already there; not retrieving.
File 'face_detector.py' already there; not retrieving.
```
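The `-nc` (no-clobber) flag tells `wget` to skip files that already exist. That is why the two UDF files above were not downloaded again. If `wget` is not available, for example on a local Windows kernel, the standard library can provide a plain-Python equivalent. The helper `download_if_missing` below is hypothetical. It mimics only the skip-if-present behaviour and does not reproduce wget's other options.

```python
import os
import urllib.request


def download_if_missing(url: str, path: str) -> bool:
    """Fetch `url` into `path` unless `path` exists, like `wget -nc`.

    Returns True if a download happened and False if the file was already there.
    """
    if os.path.exists(path):
        print(f"File '{path}' already there; not retrieving.")
        return False
    urllib.request.urlretrieve(url, path)  # the default opener follows HTTP redirects
    return True


# The same three files fetched by the wget commands above
UDF_BASE = "https://raw.githubusercontent.com/georgia-tech-db/eva/master/evadb/udfs/"
downloads = {
    "defhappy.mp4": "https://www.dropbox.com/s/gzfhwmib7u804zy/defhappy.mp4?raw=1",
    "emotion_detector.py": UDF_BASE + "emotion_detector.py",
    "face_detector.py": UDF_BASE + "face_detector.py",
}
```

Calling `download_if_missing(url, path)` for each `path, url` pair in `downloads` fetches only the missing files. `urllib.request.urlretrieve` follows redirects such as the Dropbox 302 responses in the log above.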

Load the video for analysis

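```python
# Drop any previous copy of the table so that the load below starts fresh
response = cursor.query("DROP TABLE IF EXISTS HAPPY;").df()
print(response)

# Load the video into a table named HAPPY
cursor.load(file_regex="defhappy.mp4", table_name="HAPPY", format="VIDEO").df()
```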

```text
06-17-2023 00:21:02 WARNING[drop_object_executor:drop_object_executor.py:_handle_drop_table:0050] Table: HAPPY does not exist
                             0
0  Table: HAPPY does not exist
```

| | 0 |
|---|---|
| 0 | Number of loaded VIDEO: 1 |

Create UDFs for analyzing the frames

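```python
# Register the emotion classifier, which assigns an emotion label and score to a frame (here, a cropped face)
cursor.query("""CREATE UDF IF NOT EXISTS EmotionDetector
    INPUT (frame NDARRAY UINT8(3, ANYDIM, ANYDIM))
    OUTPUT (labels NDARRAY STR(ANYDIM), scores NDARRAY FLOAT32(ANYDIM))
    TYPE Classification
    IMPL 'emotion_detector.py';
""").df()

# Register the face detector, which returns bounding boxes and confidence scores.
# In a notebook, only this last result is displayed.
cursor.query("""CREATE UDF IF NOT EXISTS FaceDetector
    INPUT (frame NDARRAY UINT8(3, ANYDIM, ANYDIM))
    OUTPUT (bboxes NDARRAY FLOAT32(ANYDIM, 4),
            scores NDARRAY FLOAT32(ANYDIM))
    TYPE FaceDetection
    IMPL 'face_detector.py';
""").df()
```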

| | 0 |
|---|---|
| 0 | UDF FaceDetector already exists, nothing added. |
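In these schemas, `ANYDIM` marks a dimension whose size can vary from call to call. `FaceDetector` declares `bboxes` as `FLOAT32(ANYDIM, 4)`, meaning one row of four coordinates per detected face. A frame with two faces therefore yields a `(2, 4)` array and a frame with one face yields a `(1, 4)` array. The hypothetical check below illustrates that reading of the declaration. It is not EvaDB's own validation logic, and it uses `None` as a stand-in for `ANYDIM`.

```python
from typing import Optional, Sequence

# Hypothetical stand-in for EvaDB's ANYDIM: None means "any size"
ANYDIM = None


def shape_matches(shape: Sequence[int], spec: Sequence[Optional[int]]) -> bool:
    """Return True if `shape` has the rank of `spec` and agrees on every fixed dim."""
    if len(shape) != len(spec):
        return False
    return all(want is ANYDIM or want == got for got, want in zip(shape, spec))


# FaceDetector's bboxes output is declared FLOAT32(ANYDIM, 4)
BBOXES_SPEC = (ANYDIM, 4)

print(shape_matches((2, 4), BBOXES_SPEC))  # True: frame with two faces
print(shape_matches((1, 4), BBOXES_SPEC))  # True: frame with one face
print(shape_matches((1, 5), BBOXES_SPEC))  # False: wrong number of coordinates
```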

Run the face detection UDF on the video

```python
# Run FaceDetector on the first 10 frames (ids 0-9)
query = cursor.table("HAPPY")
query = query.filter("id < 10")
query = query.select("id, FaceDetector(data)")
query.df()
```

```text
2023-06-17 00:21:05,712 INFO worker.py:1625 -- Started a local Ray instance.
```

| | happy.id | facedetector.bboxes | facedetector.scores |
|---|---|---|---|
| 0 | 0 | [[502, 94, 762, 435], [238, 296, 325, 398]] | [0.99990165, 0.79820246] |
| 1 | 1 | [[501, 96, 763, 435]] | [0.999918] |
| 2 | 2 | [[504, 97, 766, 437]] | [0.9999138] |
| 3 | 3 | [[498, 90, 776, 446]] | [0.99996686] |
| 4 | 4 | [[496, 99, 767, 444]] | [0.9999982] |
| 5 | 5 | [[499, 87, 777, 448], [236, 305, 324, 407]] | [0.9999136, 0.8369736] |
| 6 | 6 | [[500, 89, 778, 449]] | [0.9999131] |
| 7 | 7 | [[501, 89, 781, 452]] | [0.9999124] |
| 8 | 8 | [[503, 90, 783, 450]] | [0.99994683] |
| 9 | 9 | [[508, 87, 786, 447]] | [0.999949] |
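In frames 0 and 5, the detector also reports a second, much smaller box with a lower score (about 0.80 and 0.84). This may be a background face or a false positive. If only the main subject matters, low-scoring boxes could be dropped before emotion classification. The sketch below does this in plain Python on rows copied from the table above. The helper `keep_confident` and the 0.9 threshold are illustrative assumptions, not part of EvaDB.

```python
# Rows copied from the FaceDetector output above: frame id -> (bboxes, scores)
detections = {
    0: ([[502, 94, 762, 435], [238, 296, 325, 398]], [0.99990165, 0.79820246]),
    1: ([[501, 96, 763, 435]], [0.999918]),
    5: ([[499, 87, 777, 448], [236, 305, 324, 407]], [0.9999136, 0.8369736]),
}


def keep_confident(bboxes, scores, threshold=0.9):
    """Keep only (bbox, score) pairs whose score is at least `threshold`."""
    return [(box, score) for box, score in zip(bboxes, scores) if score >= threshold]


filtered = {fid: keep_confident(b, s) for fid, (b, s) in detections.items()}
for fid, kept in filtered.items():
    print(fid, kept)  # one box per frame survives at this threshold
```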

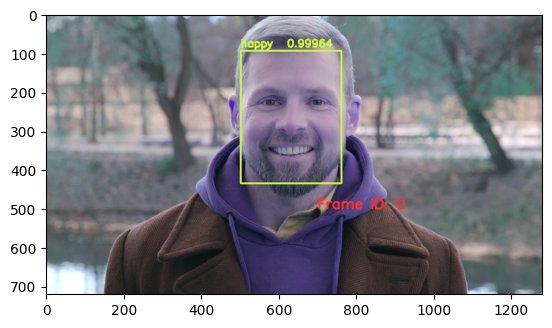

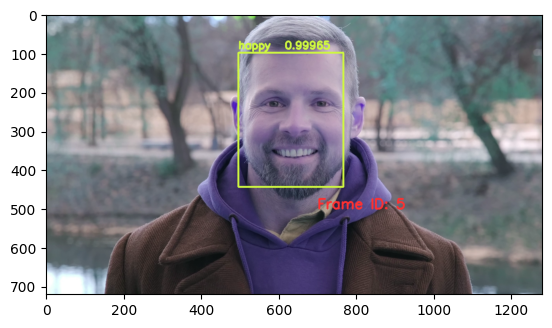

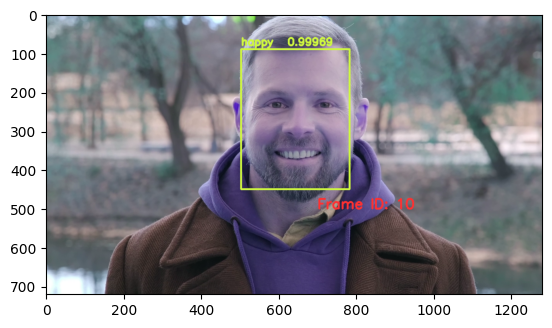

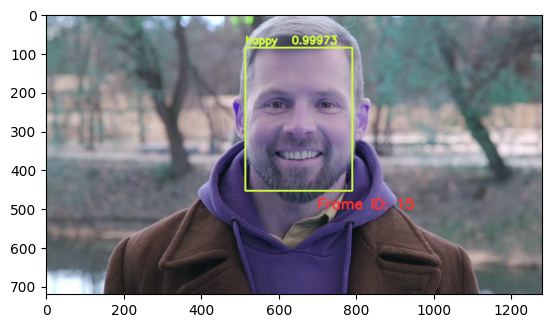

Run the emotion detection UDF on the outputs of the face detection UDF

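```python
# Expand each frame's detections into one row per face (bbox, conf),
# then classify the emotion of each cropped face for frames 0-14
query = cursor.table("HAPPY")
query = query.cross_apply("UNNEST(FaceDetector(data))", "Face(bbox, conf)")
query = query.filter("id < 15")
query = query.select("id, bbox, EmotionDetector(Crop(data, bbox))")
response = query.df()
```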
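`UNNEST` flattens each frame's list of detections into separate rows, and the `Face(bbox, conf)` alias names the two columns of each row. Frame 0 has two faces, so it contributes two rows to `response`. As a result, a row's position in the result no longer matches its frame id. The plain-Python sketch below mimics this row expansion on a few rows from the face-detection table. It illustrates the semantics only and is not how EvaDB executes the query.

```python
# A few rows from the face-detection table: (frame id, bboxes, scores)
per_frame = [
    (0, [[502, 94, 762, 435], [238, 296, 325, 398]], [0.99990165, 0.79820246]),
    (1, [[501, 96, 763, 435]], [0.999918]),
    (2, [[504, 97, 766, 437]], [0.9999138]),
]


def unnest(rows):
    """Emit one (frame_id, bbox, conf) row per detection, mimicking CROSS APPLY UNNEST."""
    return [
        (frame_id, bbox, conf)
        for frame_id, bboxes, scores in rows
        for bbox, conf in zip(bboxes, scores)
    ]


flat = unnest(per_frame)
for position, (frame_id, bbox, conf) in enumerate(flat):
    # After the two-face frame, the row position and the frame id diverge
    print(position, frame_id, bbox, conf)
```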

```python
import cv2
from matplotlib import pyplot as plt


def annotate_video(detections, input_video_path, output_video_path):
    color1 = (207, 248, 64)  # BGR colour for face boxes and emotion text
    color2 = (255, 49, 49)   # BGR colour for the frame-ID label
    thickness = 4

    vcap = cv2.VideoCapture(input_video_path)
    width = int(vcap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(vcap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fps = vcap.get(cv2.CAP_PROP_FPS)

    fourcc = cv2.VideoWriter_fourcc('m', 'p', '4', 'v')  # MPEG-4 codec
    video = cv2.VideoWriter(output_video_path, fourcc, fps, (width, height))

    frame_id = 0
    # ret is True while a frame was read successfully; frame is the BGR image
    ret, frame = vcap.read()
    while ret:
        # UNNEST yields one row per face, so select this frame's rows by its id
        # rather than by DataFrame index. The column name 'happy.id' follows the
        # face-detection output above (assumption).
        rows = detections[detections['happy.id'] == frame_id]
        for _, row in rows.iterrows():
            x1, y1, x2, y2 = (int(v) for v in row['Face.bbox'])
            label = row['emotiondetector.labels']
            score = row['emotiondetector.scores']
            # face bounding box
            frame = cv2.rectangle(frame, (x1, y1), (x2, y2), color1, thickness)
            # emotion label
            cv2.putText(frame, label, (x1, y1 - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, color1, thickness)
            # emotion score
            cv2.putText(frame, str(round(score, 5)), (x1 + 120, y1 - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, color1, thickness)

        # frame label
        cv2.putText(frame, 'Frame ID: ' + str(frame_id), (700, 500), cv2.FONT_HERSHEY_SIMPLEX, 1.2, color2, thickness)
        video.write(frame)

        # Show every fifth frame; OpenCV frames are BGR but matplotlib expects RGB
        if frame_id % 5 == 0:
            plt.imshow(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            plt.show()

        frame_id += 1
        ret, frame = vcap.read()

    video.release()
    vcap.release()
```
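`cv2.VideoWriter_fourcc('m', 'p', '4', 'v')` packs the four-character codec code into a single 32-bit integer, with the first character in the lowest byte. The sketch below reproduces that packing in plain Python so the value can be inspected without OpenCV. The function name `fourcc` is hypothetical.

```python
def fourcc(code: str) -> int:
    """Pack a four-character codec code into a 32-bit int, first char in the lowest byte."""
    if len(code) != 4:
        raise ValueError("FOURCC codes are exactly four characters")
    c1, c2, c3, c4 = (ord(c) & 0xFF for c in code)
    return c1 | (c2 << 8) | (c3 << 16) | (c4 << 24)


print(hex(fourcc("mp4v")))  # prints 0x7634706d
```

`fourcc("mp4v")` should equal the value returned by `cv2.VideoWriter_fourcc(*"mp4v")`.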

```python
input_path = 'defhappy.mp4'
output_path = 'video.mp4'

# Draw the detections on every frame and write the annotated video to output_path
annotate_video(response, input_path, output_path)
```
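`annotate_video` finds each frame's detections by scanning the whole result table once per frame. For longer videos, it is cheaper to group the rows by frame id in a single pass and then look each frame up. The sketch below shows the idea with plain tuples. The helper `group_by_frame` is hypothetical. The bounding boxes come from the face-detection table, but the labels and scores are placeholders, not real model output.

```python
from collections import defaultdict


def group_by_frame(rows):
    """Return a dict mapping frame id -> list of (bbox, label, score) tuples."""
    by_frame = defaultdict(list)
    for frame_id, bbox, label, score in rows:
        by_frame[frame_id].append((bbox, label, score))
    return dict(by_frame)


# Bounding boxes from the face-detection table; labels and scores are placeholders
rows = [
    (0, [502, 94, 762, 435], "label_a", 0.5),
    (0, [238, 296, 325, 398], "label_b", 0.5),
    (1, [501, 96, 763, 435], "label_c", 0.5),
]
by_frame = group_by_frame(rows)

# Frames without detections get an empty list
for frame_id in range(3):
    print(frame_id, len(by_frame.get(frame_id, [])))
```

With the pandas DataFrame returned by `query.df()`, `detections.groupby('happy.id')` gives the same per-frame grouping, assuming the id column is named `happy.id`.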

Dropping a User-Defined Function (UDF)

```python
# Uncomment to remove the UDFs from the catalog when you are done
#cursor.drop(item_name="EmotionDetector", item_type="UDF").df()
#cursor.drop(item_name="FaceDetector", item_type="UDF").df()
```