MOVIE ANALYSIS


Start EVA Server

We reuse the start-server notebook to launch the EVA server and then connect to it.

```python
!wget -nc "https://raw.githubusercontent.com/georgia-tech-db/eva/master/tutorials/00-start-eva-server.ipynb"
%run 00-start-eva-server.ipynb
cursor = connect_to_server()
```

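```
File '00-start-eva-server.ipynb' already there; not retrieving.
```

The start-server notebook first kills any process listening on port 5432, then launches `eva_server` in the background, logging its output to `eva.log`:

```shell
[ -z "$(lsof -ti:5432)" ] || kill -9 $(lsof -ti:5432)
nohup eva_server > eva.log 2>&1 &
```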

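Because `eva_server` is started in the background, it may need a moment before it accepts connections. If `connect_to_server()` fails, a quick check is whether anything is listening on the server port at all. The standard-library sketch below assumes the server listens on localhost:5432, the port the start-up commands clear; `port_in_use` is a hypothetical helper, not part of EVA.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds, i.e. something is listening."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code (instead of raising) on failure
        return sock.connect_ex((host, port)) == 0
```

For example, `port_in_use(5432)` returning `False` right after the start-up cell suggests the server has not bound its port yet; `eva.log` is the place to look for the reason.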

Video Files

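Download a sample video to analyze, along with the emotion-detection and face-detection UDF implementations from the EVA repository.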

```python
# A video of a happy person
!wget -nc https://www.dropbox.com/s/gzfhwmib7u804zy/defhappy.mp4

# Emotion detection UDF implementation
!wget -nc https://raw.githubusercontent.com/georgia-tech-db/eva/master/eva/udfs/emotion_detector.py

# Face detection UDF implementation
!wget -nc https://raw.githubusercontent.com/georgia-tech-db/eva/master/eva/udfs/face_detector.py
```

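`wget -nc` ("no-clobber") skips a download when a file with the same name already exists locally, which is why the first cell printed "File '00-start-eva-server.ipynb' already there; not retrieving." Outside a notebook, the same behavior can be sketched with the standard library. `fetch_if_missing` is a hypothetical helper; like wget's default, it names the local file after the last segment of the URL path.

```python
import os
import urllib.parse
import urllib.request


def fetch_if_missing(url: str, dest_dir: str = ".") -> str:
    """Download url into dest_dir unless a file with the same name already exists.

    Mirrors `wget -nc`: the local file name is the last segment of the URL path.
    """
    filename = os.path.basename(urllib.parse.urlparse(url).path)
    dest = os.path.join(dest_dir, filename)
    if os.path.exists(dest):
        print(f"File '{filename}' already there; not retrieving.")
        return dest
    # urlretrieve follows HTTP redirects and writes the response body to dest
    urllib.request.urlretrieve(url, dest)
    return dest
```

For example, `fetch_if_missing("https://raw.githubusercontent.com/georgia-tech-db/eva/master/eva/udfs/face_detector.py")` returns `./face_detector.py` and only touches the network on the first call.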

Load the video file into EVA for analysis

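```python
# Drop any existing HAPPY table so the load starts fresh.
# On a fresh database this returns "Table: HAPPY does not exist", which is harmless.
cursor.execute('DROP TABLE HAPPY')
response = cursor.fetch_all()
print(response)

# Load the video into a new table named HAPPY
cursor.execute('LOAD VIDEO "defhappy.mp4" INTO HAPPY')
response = cursor.fetch_all()
print(response)
```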

```
@status: ResponseStatus.FAIL
@batch:
 None
@error: Table: HAPPY does not exist

@status: ResponseStatus.SUCCESS
@batch:
   0
0  Number of loaded VIDEO: 1
@query_time: 0.08177788800003327
```
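Every statement in this notebook follows the same three steps: `cursor.execute(...)`, `cursor.fetch_all()`, and `print(response)`. A small wrapper keeps later cells shorter. `run_query` is a hypothetical convenience function that only calls the two cursor methods already used above; it adds no EVA functionality of its own.

```python
def run_query(cursor, query: str, verbose: bool = True):
    """Execute one EVA statement on `cursor`, fetch its response, and optionally print it."""
    cursor.execute(query)
    response = cursor.fetch_all()
    if verbose:
        print(response)
    return response
```

With it, the face-detection cell further down becomes `response = run_query(cursor, "SELECT id, FaceDetector(data) FROM HAPPY WHERE id<10")`.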

Visualize Video

```python
from IPython.display import Video

Video("defhappy.mp4", height=450, width=800, embed=True)
```

Create user-defined functions (UDFs) for analyzing the frames

```python
cursor.execute("""CREATE UDF IF NOT EXISTS EmotionDetector
                  INPUT (frame NDARRAY UINT8(3, ANYDIM, ANYDIM))
                  OUTPUT (labels NDARRAY STR(ANYDIM), scores NDARRAY FLOAT32(ANYDIM))
                  TYPE Classification IMPL 'emotion_detector.py';
               """)
response = cursor.fetch_all()
print(response)

cursor.execute("""CREATE UDF IF NOT EXISTS FaceDetector
                  INPUT (frame NDARRAY UINT8(3, ANYDIM, ANYDIM))
                  OUTPUT (bboxes NDARRAY FLOAT32(ANYDIM, 4),
                          scores NDARRAY FLOAT32(ANYDIM))
                  TYPE FaceDetection
                  IMPL 'face_detector.py';
               """)
response = cursor.fetch_all()
print(response)
```

```
@status: ResponseStatus.SUCCESS
@batch:
   0
0  UDF EmotionDetector successfully added to the database.
@query_time: 49.841968275999534

@status: ResponseStatus.SUCCESS
@batch:
   0
0  UDF FaceDetector successfully added to the database.
@query_time: 0.09775035300026502
```
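In the UDF declarations, `ANYDIM` leaves a dimension unconstrained, so `bboxes NDARRAY FLOAT32(ANYDIM, 4)` reads as an N × 4 array with one row of box coordinates per detected face, and `scores NDARRAY FLOAT32(ANYDIM)` as one confidence per face. The hypothetical helper below checks a result against that reading of the `FaceDetector` OUTPUT clause with NumPy. It checks shapes only, not dtypes, and it illustrates the declared types rather than anything EVA asks you to call.

```python
import numpy as np


def has_face_detector_shapes(bboxes: np.ndarray, scores: np.ndarray) -> bool:
    """Check shapes against OUTPUT (bboxes ...(ANYDIM, 4), scores ...(ANYDIM)); dtypes are ignored."""
    return (
        bboxes.ndim == 2
        and bboxes.shape[1] == 4  # four corner coordinates per box
        and scores.ndim == 1
        # Extra sanity check: the declaration does not tie the two ANYDIMs together,
        # but a detector should return exactly one score per box.
        and scores.shape[0] == bboxes.shape[0]
    )


# One face and one score, as for frame 0 in the next query's output
print(has_face_detector_shapes(np.array([[493, 89, 769, 441]]), np.array([0.9997701])))  # True
```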

Run the Face Detection UDF on the video

```python
cursor.execute("""SELECT id, FaceDetector(data)
                  FROM HAPPY WHERE id<10""")
response = cursor.fetch_all()
print(response)
```

```
@status: ResponseStatus.SUCCESS
@batch:
  happy.id  facedetector.bboxes  facedetector.scores
0        0   [[493 89 769 441]]          [0.9997701]
1        1   [[501 89 773 442]]         [0.99984527]
2        2   [[503 92 773 444]]          [0.9998871]
3        3   [[506 91 774 446]]          [0.9994814]
4        4   [[508 93 777 448]]         [0.99958366]
5        5   [[506 99 772 445]]         [0.99950814]
6        6   [[508 98 774 450]]           [0.999731]
7        7   [[512 98 781 451]]          [0.9997571]
8        8   [[513 97 783 451]]         [0.99983895]
9        9   [[514 98 784 452]]          [0.9998286]
@query_time: 1.516428368000561
```
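Each `facedetector.bboxes` entry is a box given by its corners `[x1, y1, x2, y2]` in pixels (the annotation code later in this notebook draws it with exactly those corners). The boxes barely move from frame to frame, and intersection-over-union (IoU) is one standard way to quantify that. This pure-Python sketch is illustrative only: `iou` is a hypothetical helper, and it treats coordinates as continuous (no +1 pixel convention).

```python
def iou(a, b):
    """Intersection-over-union of two [x1, y1, x2, y2] boxes (continuous coordinates)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Boxes for frames 0-3, copied from the query output above
boxes = [[493, 89, 769, 441], [501, 89, 773, 442], [503, 92, 773, 444], [506, 91, 774, 446]]
for frame_id in range(1, len(boxes)):
    print(frame_id - 1, "->", frame_id, round(iou(boxes[frame_id - 1], boxes[frame_id]), 3))
```

Values close to 1 confirm that the detector is tracking the same face across consecutive frames.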

Run the Emotion Detection UDF on the outputs of the Face Detection UDF
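The query below crops each frame to a detected face with `Crop(data, bbox)` before handing it to `EmotionDetector`. Assuming a frame is an H × W × 3 array (the layout OpenCV decodes to) and a box is `[x1, y1, x2, y2]` in pixels, cropping is plain array slicing: rows come from the y range and columns from the x range. This NumPy sketch shows the idea and clamps boxes to the frame; it is not EVA's `Crop` implementation, and `crop_to_bbox` is a hypothetical name.

```python
import numpy as np


def crop_to_bbox(frame: np.ndarray, bbox) -> np.ndarray:
    """Return the part of an H x W x C `frame` inside bbox = [x1, y1, x2, y2]."""
    height, width = frame.shape[:2]
    x1, y1, x2, y2 = (int(v) for v in bbox)
    # Clamp to the frame so boxes that spill past an edge still give a valid slice
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    # Rows are indexed by y, columns by x
    return frame[y1:y2, x1:x2]


# Usage with a dummy 720 x 1280 frame and the frame-0 box from the face detector
frame = np.zeros((720, 1280, 3), dtype=np.uint8)
face = crop_to_bbox(frame, [493, 89, 769, 441])
print(face.shape)  # (352, 276, 3)
```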

```python
cursor.execute("""SELECT id, bbox, EmotionDetector(Crop(data, bbox))
                  FROM HAPPY JOIN LATERAL UNNEST(FaceDetector(data)) AS Face(bbox, conf)
                  WHERE id < 15;""")
response = cursor.fetch_all()
print(response)
```

```
@status: ResponseStatus.SUCCESS
@batch:
    happy.id            Face.bbox  emotiondetector.labels  emotiondetector.scores
0          0  [493, 89, 769, 441]                   happy                0.999640
1          1  [501, 89, 773, 442]                   happy                0.999674
2          2  [503, 92, 773, 444]                   happy                0.999696
3          3  [506, 91, 774, 446]                   happy                0.999686
4          4  [508, 93, 777, 448]                   happy                0.999690
5          5  [506, 99, 772, 445]                   happy                0.999709
6          6  [508, 98, 774, 450]                   happy                0.999732
7          7  [512, 98, 781, 451]                   happy                0.999721
8          8  [513, 97, 783, 451]                   happy                0.999709
9          9  [514, 98, 784, 452]                   happy                0.999718
10        10  [515, 97, 786, 452]                   happy                0.999701
11        10  [50, 524, 120, 599]                 neutral                0.998291
12        11  [513, 96, 784, 452]                   happy                0.999687
13        12  [512, 95, 785, 453]                   happy                0.999676
14        13  [512, 94, 785, 452]                   happy                0.999639
15        14  [512, 93, 786, 453]                   happy                0.999649
@query_time: 2.891812810999909
```
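`JOIN LATERAL UNNEST(FaceDetector(data)) AS Face(bbox, conf)` runs the face detector once per frame and expands its per-frame list of faces into one output row per face, each paired with its frame's columns. That is why the result has 16 rows for the 15 frames with `id < 15`: frame 10 contains a second, smaller face (classified `neutral`), so it appears twice. The pure-Python sketch below mimics that row expansion on plain lists; `lateral_unnest` is a hypothetical helper, not EVA code, and in it a frame with no detected faces contributes no rows, as with an inner lateral join in SQL.

```python
def lateral_unnest(frames, detect):
    """Yield (frame_id, bbox, conf) once per detected face, mimicking JOIN LATERAL UNNEST.

    `frames` is an iterable of (frame_id, frame) pairs; `detect(frame)` returns a list
    of (bbox, conf) tuples. A frame with no detections yields no rows.
    """
    for frame_id, frame in frames:
        for bbox, conf in detect(frame):
            yield frame_id, bbox, conf


# Toy stand-in detector keyed by frame ID: frame 10 has two faces, as in the output above.
# Boxes are copied from that output; the confidences are placeholders.
fake_detections = {
    9: [([514, 98, 784, 452], 0.99)],
    10: [([515, 97, 786, 452], 0.99), ([50, 524, 120, 599], 0.98)],
    11: [([513, 96, 784, 452], 0.99)],
}
rows = list(lateral_unnest(((i, i) for i in (9, 10, 11)), lambda f: fake_detections[f]))
for row in rows:
    print(row)
```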

import cv2

from pprint import pprint

from matplotlib import pyplot as plt

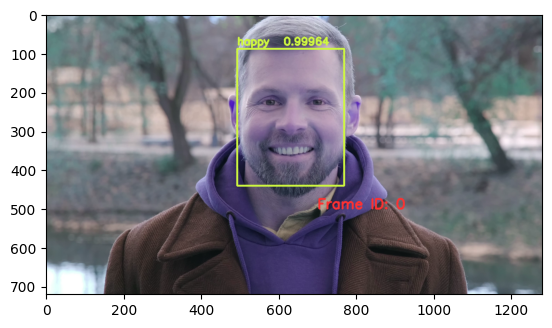

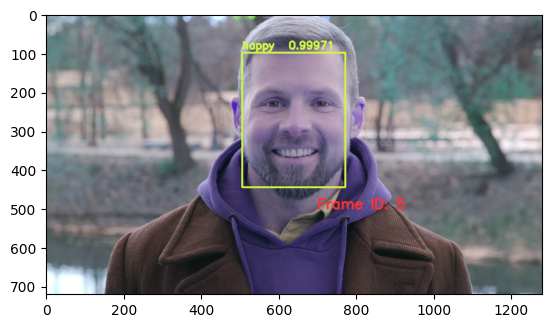

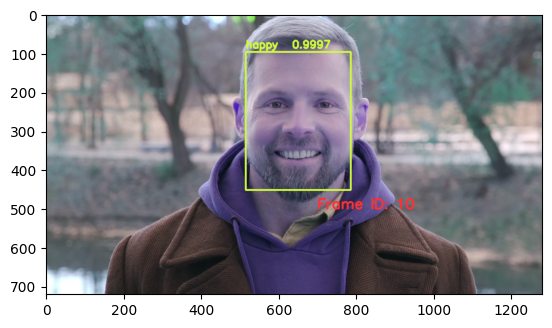

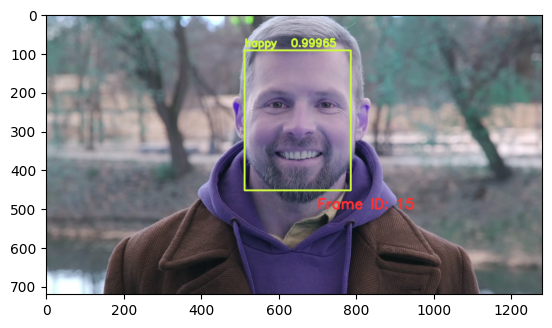

def annotate_video(detections, input_video_path, output_video_path):

color1=(207, 248, 64)

color2=(255, 49, 49)

thickness=4

vcap = cv2.VideoCapture(input_video_path)

width = int(vcap.get(3))

height = int(vcap.get(4))

fps = vcap.get(5)

fourcc = cv2.VideoWriter_fourcc('m', 'p', '4', 'v') #codec

video=cv2.VideoWriter(output_video_path, fourcc, fps, (width,height))

frame_id = 0

# Capture frame-by-frame

# ret = 1 if the video is captured; frame is the image

ret, frame = vcap.read()

while ret:

df = detections

df = df[['Face.bbox', 'emotiondetector.labels', 'emotiondetector.scores']][df.index == frame_id]

if df.size:

x1, y1, x2, y2 = df['Face.bbox'].values[0]

label = df['emotiondetector.labels'].values[0]

score = df['emotiondetector.scores'].values[0]

x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)

# object bbox

frame=cv2.rectangle(frame, (x1, y1), (x2, y2), color1, thickness)

# object label

cv2.putText(frame, label, (x1, y1-10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, color1, thickness)

# object score

cv2.putText(frame, str(round(score, 5)), (x1+120, y1-10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, color1, thickness)

# frame label

cv2.putText(frame, 'Frame ID: ' + str(frame_id), (700, 500), cv2.FONT_HERSHEY_SIMPLEX, 1.2, color2, thickness)

video.write(frame)

# Show every fifth frame

if frame_id % 5 == 0:

plt.imshow(frame)

plt.show()

frame_id+=1

ret, frame = vcap.read()

video.release()

vcap.release()

from ipywidgets import Video, Image

input_path = 'defhappy.mp4'

output_path = 'video.mp4'

dataframe = response.batch.frames

annotate_video(dataframe, input_path, output_path)
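OpenCV decodes frames with their channels in BGR order, whereas `plt.imshow` interprets a three-channel array as RGB, so a frame displayed without conversion shows red and blue swapped. `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)` is the usual fix; for a plain H × W × 3 array, reversing the last axis is an equivalent NumPy-only conversion, sketched here with a hypothetical `bgr_to_rgb` helper.

```python
import numpy as np


def bgr_to_rgb(frame: np.ndarray) -> np.ndarray:
    """Swap the channel order of an H x W x 3 image from BGR to RGB (or back)."""
    # Reversing the last axis maps channels (B, G, R) -> (R, G, B)
    return frame[..., ::-1]


pixel = np.array([[[255, 0, 0]]], dtype=np.uint8)  # pure blue in BGR order
print(bgr_to_rgb(pixel)[0, 0])
```

The result is a reversed-stride view rather than a copy; wrap it in `np.ascontiguousarray` before passing it back to OpenCV drawing functions, which expect contiguous arrays.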