Object Detection Tutorial#

Start EVA server#

We reuse the start-server notebook to launch the EVA server.

!wget -nc "https://raw.githubusercontent.com/georgia-tech-db/eva/master/tutorials/00-start-eva-server.ipynb"

%run 00-start-eva-server.ipynb

cursor = connect_to_server()

File '00-start-eva-server.ipynb' already there; not retrieving.

nohup eva_server > eva.log 2>&1 &

Note: you may need to restart the kernel to use updated packages.

Download the Videos#

# Getting the video files

!wget -nc https://www.dropbox.com/s/k00wge9exwkfxz6/ua_detrac.mp4?raw=1 -O ua_detrac.mp4

# Getting the Yolo object detector

!wget -nc https://raw.githubusercontent.com/georgia-tech-db/eva/master/eva/udfs/yolo_object_detector.py

File ‘ua_detrac.mp4’ already there; not retrieving.

File ‘yolo_object_detector.py’ already there; not retrieving.

Load the surveillance videos for analysis#

We use a regular expression to load all the videos into the table#

cursor.execute('DROP TABLE IF EXISTS ObjectDetectionVideos')

response = cursor.fetch_all()

print(response)

cursor.execute('LOAD VIDEO "ua_detrac.mp4" INTO ObjectDetectionVideos;')

response = cursor.fetch_all()

print(response)

@status: ResponseStatus.SUCCESS

@batch:

0

0 Table Successfully dropped: ObjectDetectionVideos

@query_time: 0.3437608620006358

@status: ResponseStatus.SUCCESS

@batch:

0

0 Number of loaded VIDEO: 1

@query_time: 1.8837439379994976
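Each step in this tutorial repeats the same execute, fetch, print sequence. A minimal sketch of a wrapper for that pattern follows. `run_query` is a hypothetical helper name, not part of EVA; it assumes only that the cursor exposes `execute` and `fetch_all`, as used above.

```python
def run_query(cursor, query):
    """Execute one query and return the fetched response.

    Hypothetical convenience helper (not an EVA API). It relies only on
    the `execute` and `fetch_all` calls already used in this tutorial.
    """
    cursor.execute(query)
    response = cursor.fetch_all()
    print(response)
    return response
```

With such a helper, the load step could be written as `run_query(cursor, 'LOAD VIDEO "ua_detrac.mp4" INTO ObjectDetectionVideos;')`.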

Visualize Video#

from IPython.display import Video

Video("ua_detrac.mp4", embed=True)

Register the YOLO Object Detector as a User-Defined Function (UDF) in EVA#

cursor.execute("""CREATE UDF IF NOT EXISTS YoloV5

INPUT (frame NDARRAY UINT8(3, ANYDIM, ANYDIM))

OUTPUT (labels NDARRAY STR(ANYDIM), bboxes NDARRAY FLOAT32(ANYDIM, 4),

scores NDARRAY FLOAT32(ANYDIM))

TYPE Classification

IMPL 'yolo_object_detector.py';

""")

response = cursor.fetch_all()

print(response)

@status: ResponseStatus.SUCCESS

@batch:

0

0 UDF YoloV5 already exists, nothing added.

@query_time: 0.012568520000058925
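The OUTPUT clause above declares three parallel arrays per frame: `labels` of length N, `bboxes` of shape (N, 4), and `scores` of length N. As a rough illustration of that contract, the sketch below checks that three sequences line up. `check_detection_shapes` is a hypothetical helper written for this tutorial, not an EVA function, and the sample values are made up.

```python
def check_detection_shapes(labels, bboxes, scores):
    """Return True if the three sequences describe the same N detections.

    Hypothetical helper, not part of EVA. It mirrors the OUTPUT clause:
    labels (N,), bboxes (N, 4), scores (N,).
    """
    n = len(labels)
    return (
        len(bboxes) == n
        and len(scores) == n
        and all(len(box) == 4 for box in bboxes)
    )


# Made-up detections for illustration only.
ok = check_detection_shapes(
    ["car", "person"],
    [[0.0, 0.0, 10.0, 10.0], [5.0, 5.0, 20.0, 20.0]],
    [0.9, 0.8],
)
print(ok)
```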

Run Object Detector on the video#

cursor.execute("""SELECT id, YoloV5(data)

FROM ObjectDetectionVideos

WHERE id < 20""")

response = cursor.fetch_all()

response.as_df()

| | objectdetectionvideos.id | yolov5.labels | yolov5.bboxes | yolov5.scores |

|---|---|---|---|---|

| 0 | 0 | [car, car, car, car, car, car, car, car, car, ... | 0 [830.513916015625, 276.9407958984375, 96... | [0.9019389748573303, 0.8878239393234253, 0.854... |

| 1 | 1 | [car, car, car, car, car, car, car, car, car, ... | 0 [833.4142456054688, 277.4311828613281, 9... | [0.898006021976471, 0.8685559630393982, 0.8364... |

| 2 | 2 | [car, car, car, car, car, car, car, car, car, ... | 0 [837.3671875, 278.4643859863281, 960.0, ... | [0.8956703543663025, 0.8518660068511963, 0.848... |

| 3 | 3 | [car, car, car, car, car, car, car, car, car, ... | 0 [840.7059326171875, 279.1480712890625, 9... | [0.8803831338882446, 0.8661114573478699, 0.849... |

| 4 | 4 | [car, car, car, car, car, car, car, car, car, ... | 0 [623.8599243164062, 218.58824157714844, ... | [0.8975156545639038, 0.8809235692024231, 0.843... |

| 5 | 5 | [car, car, car, car, car, car, car, car, car, ... | 0 [624.7167358398438, 217.9913787841797, 7... | [0.9156808257102966, 0.8994483351707458, 0.846... |

| 6 | 6 | [car, car, car, car, car, car, car, car, perso... | 0 [625.336669921875, 217.91189575195312, 7... | [0.9120900630950928, 0.9068282842636108, 0.841... |

| 7 | 7 | [car, car, car, car, car, car, car, car, car, ... | 0 [626.2813110351562, 218.2920379638672, 7... | [0.9046719670295715, 0.8707321882247925, 0.845... |

| 8 | 8 | [car, car, car, car, car, car, car, car, car, ... | 0 [628.342041015625, 219.62632751464844, 7... | [0.8893280625343323, 0.8552687168121338, 0.853... |

| 9 | 9 | [car, car, car, car, car, car, car, car, car, ... | 0 [630.1256713867188, 221.2857666015625, 7... | [0.8876321911811829, 0.855767548084259, 0.8532... |

| 10 | 10 | [car, car, car, car, car, car, car, car, car, ... | 0 [632.1705932617188, 222.32717895507812, ... | [0.8991596102714539, 0.8353574872016907, 0.830... |

| 11 | 11 | [car, car, car, car, car, car, car, car, perso... | 0 [634.2802734375, 222.0661163330078, 747.... | [0.9085389971733093, 0.8469472527503967, 0.838... |

| 12 | 12 | [car, car, car, car, car, car, car, car, car, ... | 0 [636.1024169921875, 222.5532989501953, 7... | [0.9089045524597168, 0.8619462847709656, 0.854... |

| 13 | 13 | [car, car, car, car, car, car, car, car, car, ... | 0 [636.18408203125, 222.69625854492188, 75... | [0.8987026214599609, 0.88194340467453, 0.85846... |

| 14 | 14 | [car, car, car, car, car, car, car, person, ca... | 0 [638.7570190429688, 223.4717559814453, 7... | [0.8915325403213501, 0.8549790978431702, 0.854... |

| 15 | 15 | [car, car, car, car, car, car, car, person, ca... | 0 [641.4051513671875, 224.3956298828125, 7... | [0.8875617980957031, 0.8631888031959534, 0.847... |

| 16 | 16 | [car, car, car, car, car, car, person, car, ca... | 0 [644.232177734375, 225.8704833984375, 76... | [0.8969656825065613, 0.863024890422821, 0.8239... |

| 17 | 17 | [car, car, car, car, car, car, car, person, ca... | 0 [646.2791748046875, 225.99281311035156, ... | [0.8984966278076172, 0.8612021207809448, 0.834... |

| 18 | 18 | [car, car, car, car, car, car, car, car, car, ... | 0 [647.1568603515625, 226.51370239257812, ... | [0.8983429074287415, 0.8600167036056519, 0.840... |

| 19 | 19 | [car, car, car, car, car, car, car, car, car, ... | 0 [648.61767578125, 227.1198272705078, 768... | [0.8998422026634216, 0.8530007004737854, 0.848... |

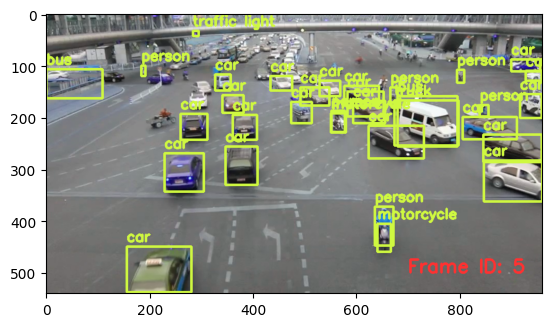

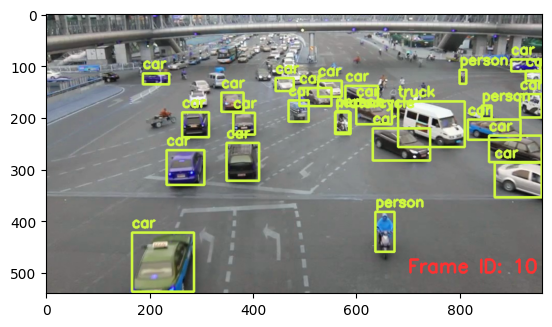

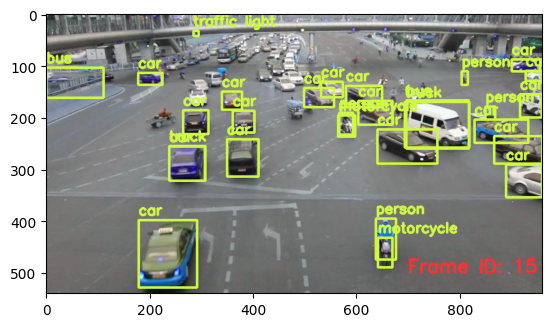
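Each row of the result holds parallel lists of labels and scores for one frame, so a common next step is to count the confident detections per frame. The following is a minimal sketch in plain Python; `count_confident_labels` and the `min_score` threshold are assumptions introduced for illustration, and the sample values are made up rather than taken from the output above.

```python
from collections import Counter


def count_confident_labels(labels, scores, min_score=0.5):
    """Count the labels of one frame whose score is at least min_score."""
    return Counter(
        label for label, score in zip(labels, scores) if score >= min_score
    )


# Made-up values for illustration only.
example_labels = ["car", "car", "person", "car"]
example_scores = [0.90, 0.85, 0.40, 0.30]
counts = count_confident_labels(example_labels, example_scores, min_score=0.5)
print(counts)  # Counter({'car': 2})
```

On the DataFrame returned by `response.as_df()`, the same function could be applied row by row to the `yolov5.labels` and `yolov5.scores` columns, assuming each cell holds a plain sequence.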

Visualizing output of the Object Detector on the video#

import cv2
from pprint import pprint
from matplotlib import pyplot as plt

def annotate_video(detections, input_video_path, output_video_path):
    color1 = (207, 248, 64)
    color2 = (255, 49, 49)
    thickness = 4

    vcap = cv2.VideoCapture(input_video_path)
    width = int(vcap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(vcap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fps = vcap.get(cv2.CAP_PROP_FPS)
    fourcc = cv2.VideoWriter_fourcc('m', 'p', '4', 'v')  # codec
    video = cv2.VideoWriter(output_video_path, fourcc, fps, (width, height))

    frame_id = 0
    # Capture frame-by-frame
    # ret is True if a frame was read; frame is the image
    ret, frame = vcap.read()

    while ret:
        # Select the detections for the current frame
        df = detections
        df = df[['yolov5.bboxes', 'yolov5.labels']][df.index == frame_id]
        if df.size:
            dfLst = df.values.tolist()
            for bbox, label in zip(dfLst[0][0], dfLst[0][1]):
                x1, y1, x2, y2 = bbox
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)
                # object bbox
                frame = cv2.rectangle(frame, (x1, y1), (x2, y2), color1, thickness)
                # object label
                cv2.putText(frame, label, (x1, y1-10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, color1, thickness)
            # frame label
            cv2.putText(frame, 'Frame ID: ' + str(frame_id), (700, 500), cv2.FONT_HERSHEY_SIMPLEX, 1.2, color2, thickness)
            video.write(frame)

        # Stop after twenty frames (id < 20 in previous query)
        if frame_id == 20:
            break

        # Show every fifth frame
        if frame_id % 5 == 0:
            # OpenCV frames are BGR; matplotlib expects RGB
            plt.imshow(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            plt.show()

        frame_id += 1
        ret, frame = vcap.read()

    video.release()
    vcap.release()

from ipywidgets import Video, Image

input_path = 'ua_detrac.mp4'

output_path = 'video.mp4'

dataframe = response.as_df()

annotate_video(dataframe, input_path, output_path)

Video.from_file(output_path)
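The detector returns floating-point box coordinates, and a box can touch or slightly exceed the frame border. One possible refinement, sketched below, is to convert the coordinates to integers and clip them to the frame before drawing. `clamp_bbox` is a hypothetical helper, not part of EVA or OpenCV, and the frame size in the example is an assumed value.

```python
def clamp_bbox(bbox, width, height):
    """Convert a float (x1, y1, x2, y2) box to ints clipped to the frame.

    Hypothetical helper for illustration. Coordinates are truncated with
    int() and then limited to [0, width - 1] and [0, height - 1].
    """
    x1, y1, x2, y2 = (int(v) for v in bbox)
    x1 = max(0, min(x1, width - 1))
    x2 = max(0, min(x2, width - 1))
    y1 = max(0, min(y1, height - 1))
    y2 = max(0, min(y2, height - 1))
    return x1, y1, x2, y2


# Made-up box and an assumed 960x540 frame, for illustration only.
print(clamp_bbox((-5.2, 10.7, 970.9, 600.1), width=960, height=540))
# (0, 10, 959, 539)
```

Inside `annotate_video`, such a helper could replace the two lines that unpack `bbox` and cast it with `int`.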

Dropping a User-Defined Function (UDF)#

cursor.execute("DROP UDF YoloV5;")

response = cursor.fetch_all()

print(response)

@status: ResponseStatus.SUCCESS

@batch:

0

0 UDF YoloV5 successfully dropped

@query_time: 0.1606277789996966